Abstract

Consistency models have recently been introduced to accelerate the generation speed of diffusion models by directly predicting the solution (data) of the probability flow ODE (PF ODE) from initial noise.

However, the training of consistency models requires learning to map all intermediate points along PF ODE trajectories to their corresponding endpoints.

This task is much more challenging than the ultimate objective of one-step generation, which only concerns the PF ODE's noise-to-data mapping.

We empirically find that this training paradigm limits the one-step generation performance of consistency models.

To address this issue, we generalize consistency training to the truncated time range, which allows the model to ignore denoising tasks at earlier time steps and focus its capacity on generation.

We propose a new parameterization of the consistency function and a two-stage training procedure that prevent the truncated-time training from collapsing to a trivial solution.

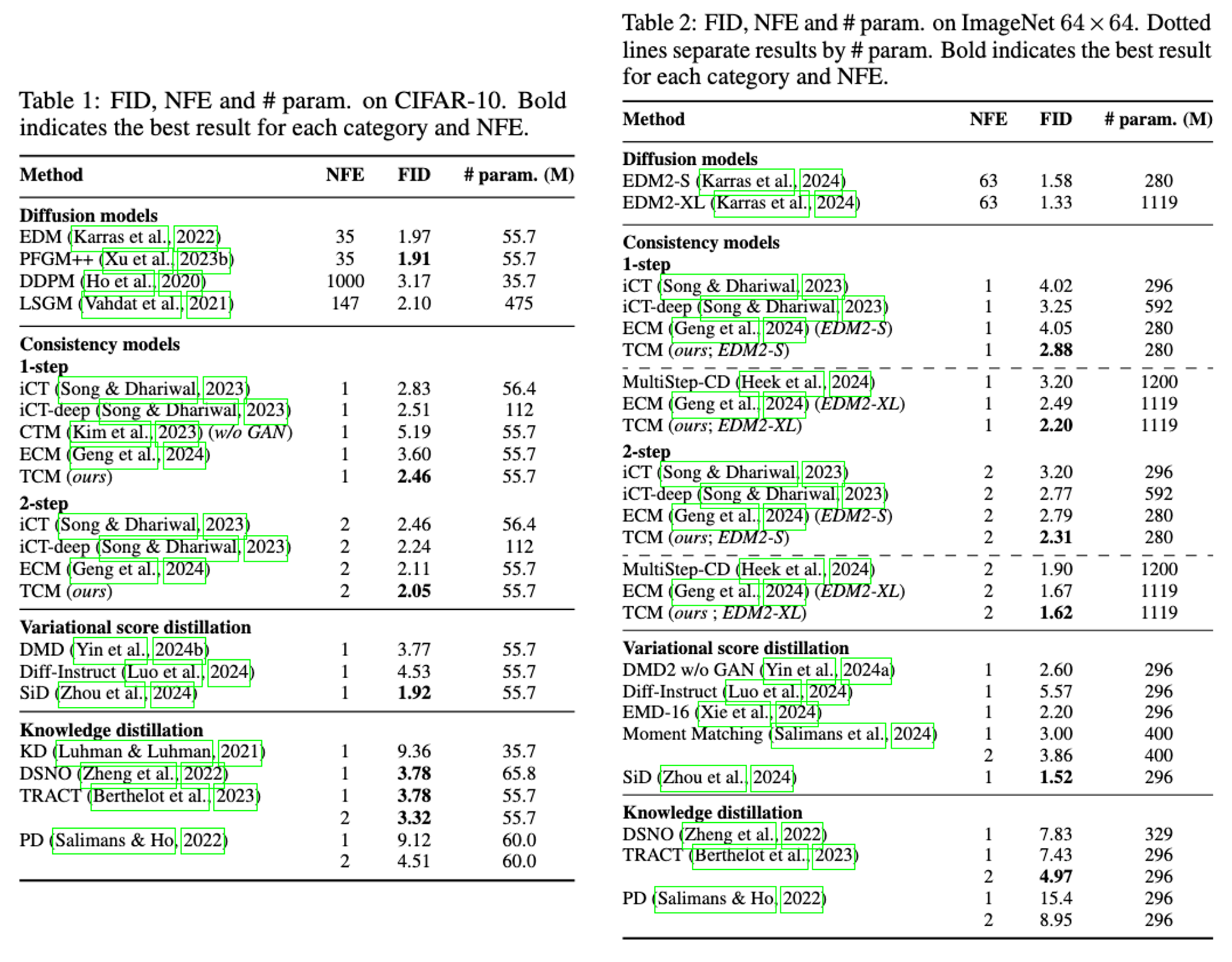

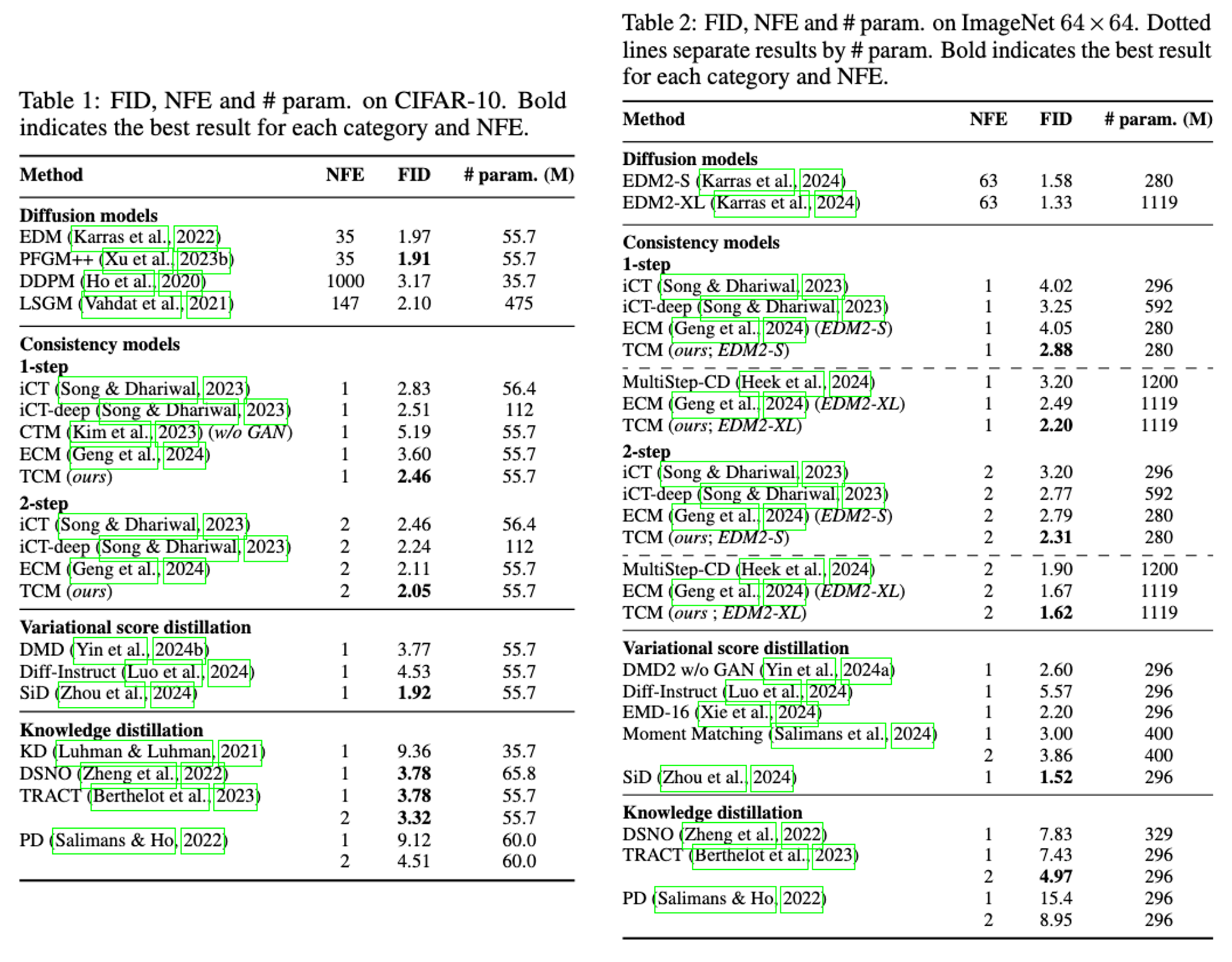

Experiments on CIFAR-10 and ImageNet 64x64 datasets show that our method achieves better one-step and two-step FIDs than the state-of-the-art consistency models such as iCT-deep, using more than 2x smaller networks.

Key Results

TCM improves both the sample quality and the training stability of consistency models across different datasets and sampling steps. On CIFAR-10 and ImageNet 64x64 datasets, TCM outperforms the iCT, the previous best consistency model, in both one-step and two-step generation using similar network size. TCM even outperforms iCT-deep that uses a 2x larger network across datasets and sampling steps. By using our largest network, we achieve a one-step FID of 2.20 on ImageNet 64x64, which is competitive with the current state-of-the-art.

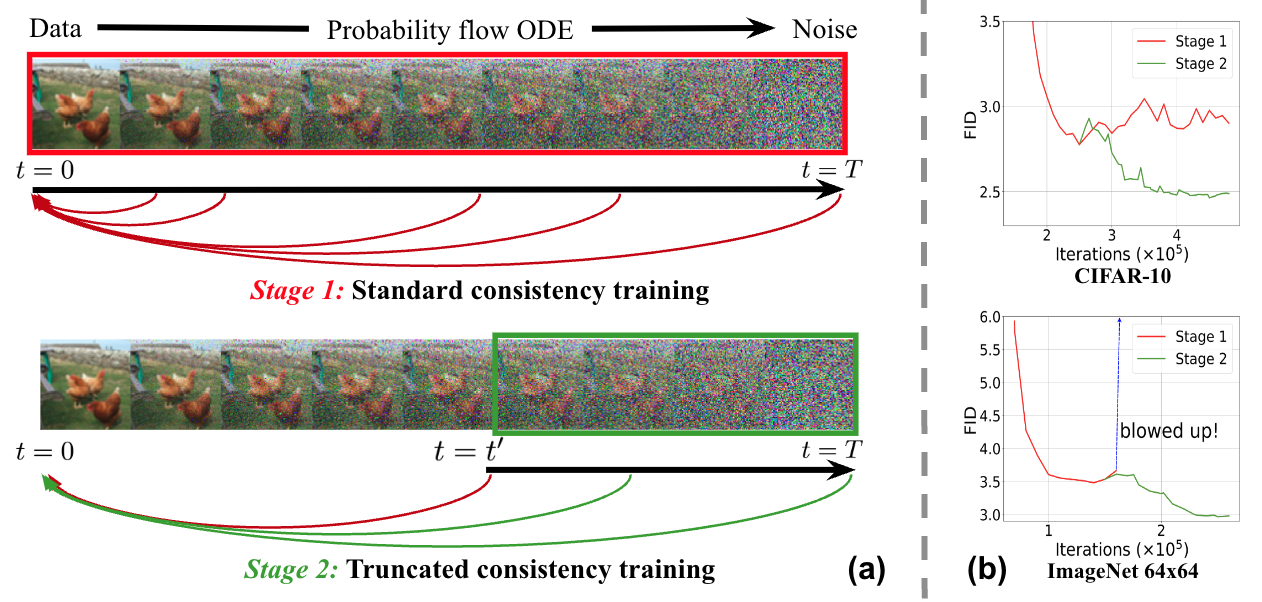

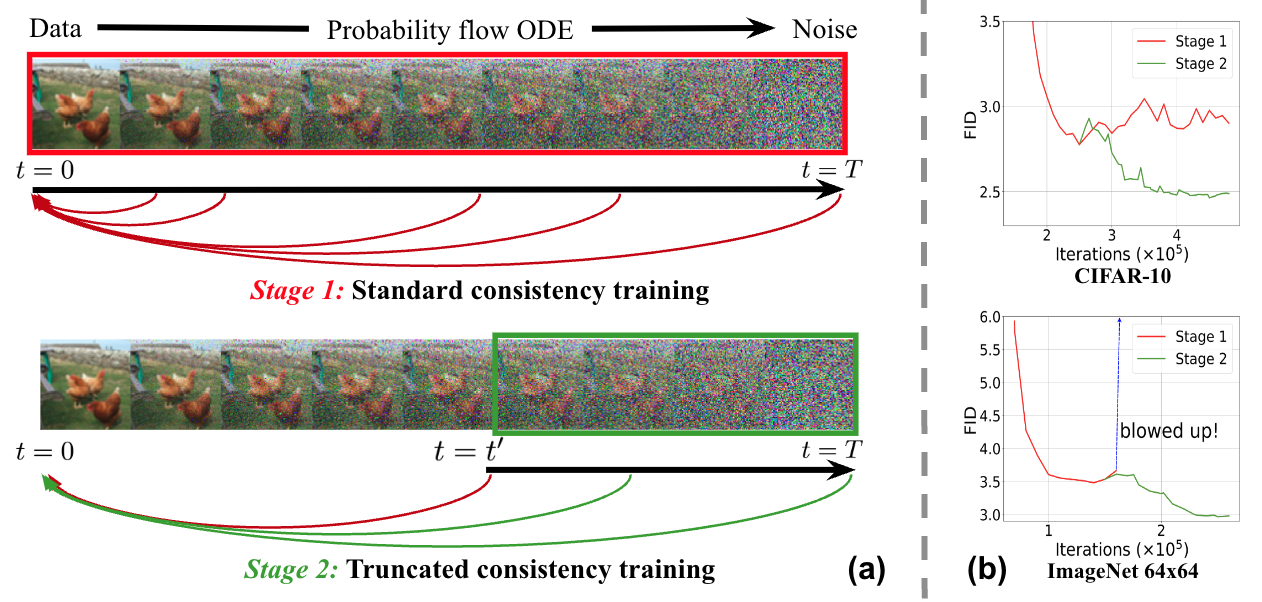

Conceptual Illustration of TCM

TCM training consists of two stages. The first stage is the standard consistency training. The second stage is the truncated-time training, which is conducted over a truncated time range.

Here, stage-1 model serves as a boundary condition for stage-2 training. This ensures that the truncated consistency models do not collapse to a trivial solution and learn data distribution.

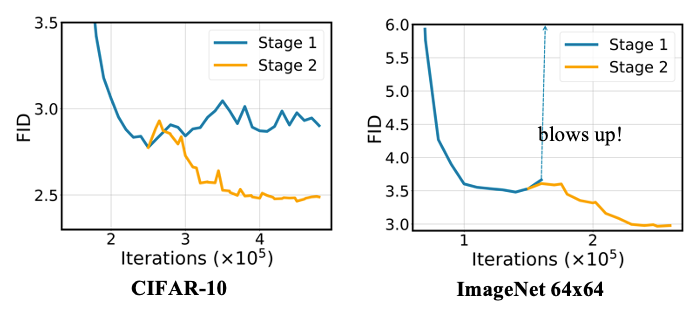

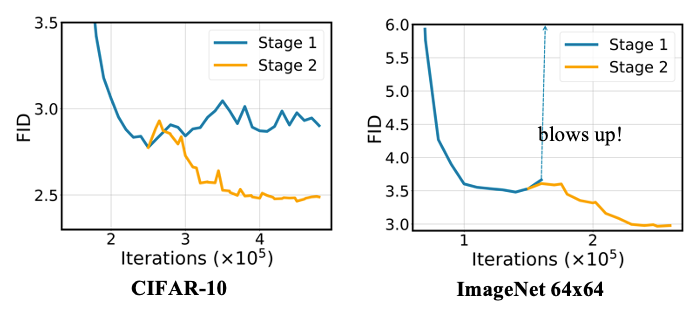

FID Improvement of TCM

Truncated training improves the FID over the standard consistency training.

Comparison with Other Methods

TCM achieves better FID than the previous best consistency models, iCT and iCT-deep, on CIFAR-10 and ImageNet 64x64 datasets, establishing a new state-of-the-art among consistency models.

One-step and Two-step Generation Results

Citation

BibTex:

@misc{lee2024truncatedconsistencymodels,

title={Truncated Consistency Models},

author={Sangyun Lee and Yilun Xu and Tomas Geffner and Giulia Fanti and Karsten Kreis and Arash Vahdat and Weili Nie},

year={2024},

eprint={2410.14895},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/abs/2410.14895},

}

TCM training consists of two stages. The first stage is the standard consistency training. The second stage is the truncated-time training, which is conducted over a truncated time range.

Here, stage-1 model serves as a boundary condition for stage-2 training. This ensures that the truncated consistency models do not collapse to a trivial solution and learn data distribution.

TCM training consists of two stages. The first stage is the standard consistency training. The second stage is the truncated-time training, which is conducted over a truncated time range.

Here, stage-1 model serves as a boundary condition for stage-2 training. This ensures that the truncated consistency models do not collapse to a trivial solution and learn data distribution.

Truncated training improves the FID over the standard consistency training.

Truncated training improves the FID over the standard consistency training.

TCM achieves better FID than the previous best consistency models, iCT and iCT-deep, on CIFAR-10 and ImageNet 64x64 datasets, establishing a new state-of-the-art among consistency models.

TCM achieves better FID than the previous best consistency models, iCT and iCT-deep, on CIFAR-10 and ImageNet 64x64 datasets, establishing a new state-of-the-art among consistency models.